We turn your prototypes into production-grade systems

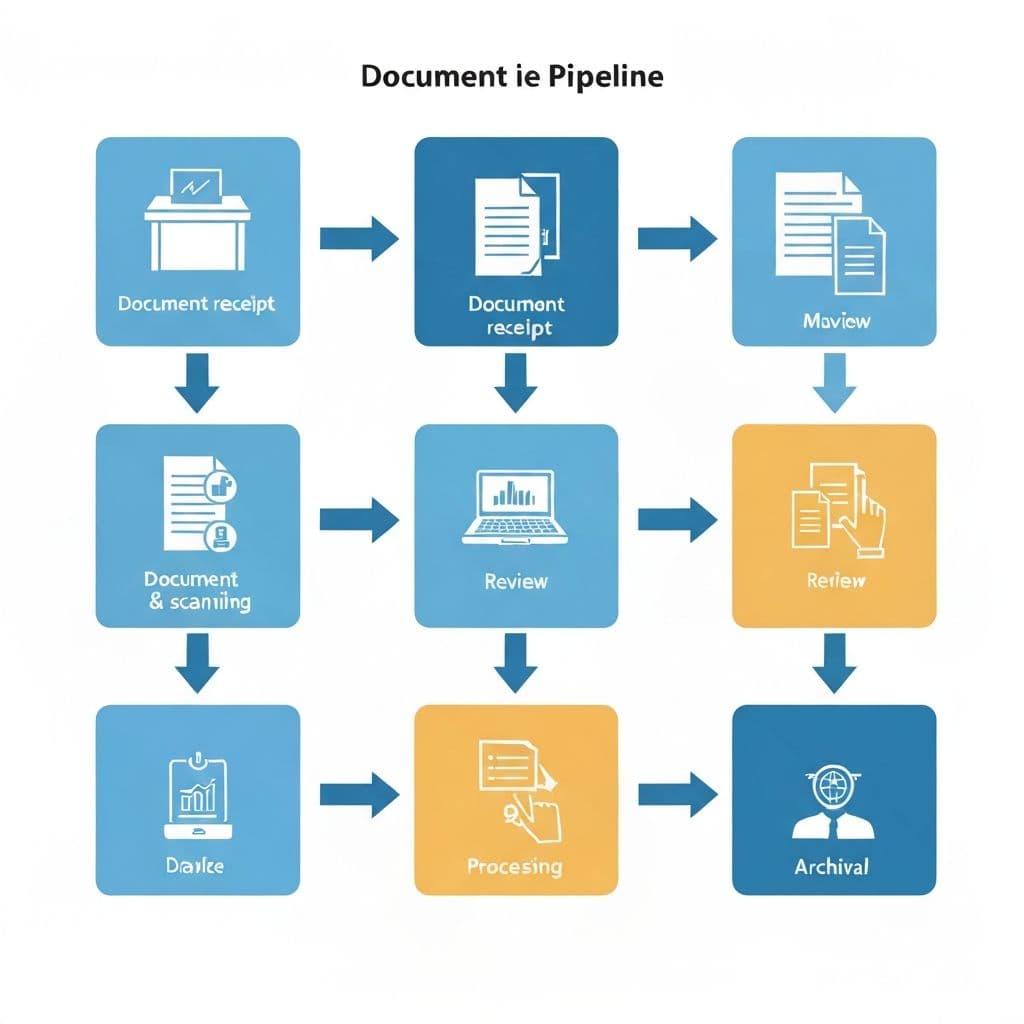

We build AI applications that handle lease abstraction, COI verification, and document processing—with source citations and human-in-the-loop review.

Our Evaluation Framework

Every AI application passes through three layers of validation before reaching production

Systematic Quality Measurement

Quantitative scores and structured reports track performance across your pipeline

Application-Specific Evaluations

Application-specific assessments measure what actually matters for your use case

Error Analysis and Continuous Monitoring

Automated guardrails catch drift and block bad outputs before they reach users

All Our Applications Pass Rigorous Evaluations

Evaluations are the foundation of reliable AI systems—measuring quality, catching errors, and enabling continuous improvement

Systematic Quality Measurements

Evaluations systematically measure quality in an LLM pipeline, producing quantitative scores or structured reports that help teams understand how well their AI application is performing.

Error Detection & Improvement

Evaluations catch errors and enable improvement. They run as background monitors to detect drift, as guardrails to block bad outputs, or as labelers that prepare data for fine-tuning and identify failure cases.

Application-Specific Assessments

These assessments measure what actually matters for your use case (like helpfulness or format adherence), rather than relying on generic benchmarks that may not reflect your requirements.

Get Started

Schedule a call to discuss your automation project